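What is this post about?

- Previously, I built an Infinispan Server cluster on OKD (Kubernetes) using DNS discovery.

- I realized I had never accessed that deployed Infinispan Server from a Pod running in the same cluster, so I decided to try it.

With an Embedded Cache, it would simply be a matter of node discovery among similar Pods.

Accessing the cluster over the Hot Rod protocol through a Service is presumably the starting point, but I wanted to see what actually happens.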

Infinispan on OKD(OpenShift)

The following resources cover running Infinispan on OKD (OpenShift).

Infinispan: Running Infinispan cluster on OpenShift

OpenShift/Kubernetes tutorial - Infinispan

Few of them describe access over Hot Rod, but the first entry appears to configure RemoteCacheManager to access the Service in the ordinary way.

Let's try that approach.

Environment

The environment used this time is as follows.

    $ minishift version
    minishift v1.30.0+186b034

    $ oc version
    oc v3.11.0+0cbc58b
    kubernetes v1.11.0+d4cacc0
    features: Basic-Auth GSSAPI Kerberos SPNEGO

    Server https://192.168.42.144:8443
    kubernetes v1.11.0+d4cacc0

For Infinispan Server, I use the image published on DockerHub. The version is 9.4.5.Final.

DockerHub / jboss/infinispan-server

Configuration

For Infinispan Server, I reuse the manifest (YAML) from my earlier entry almost as-is (the YAML is included at the end).

Deploying Infinispan Server to OKD/Minishift and forming a cluster with DNS discovery - CLOVER🍀

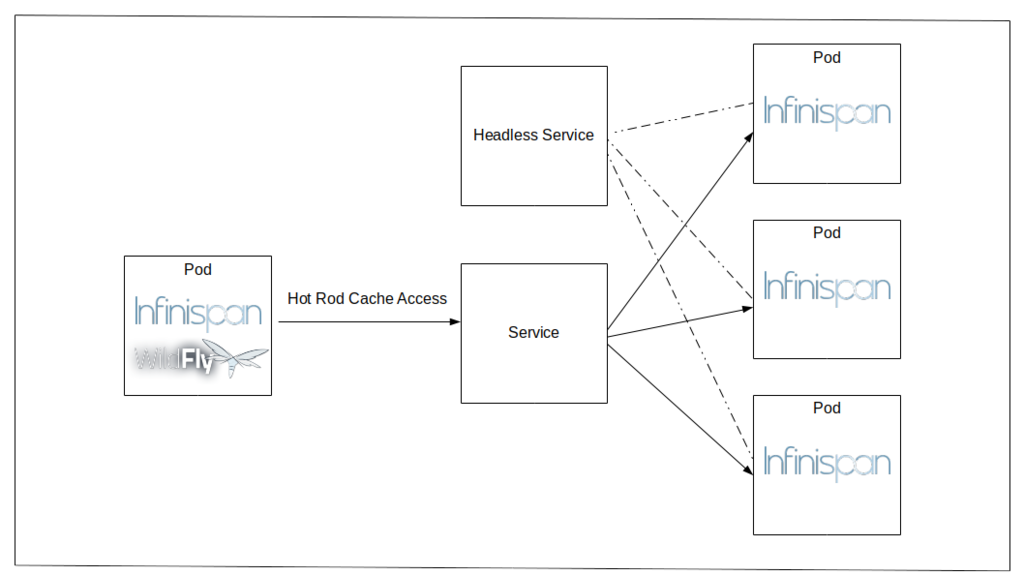

Infinispan Server側は、Infinispan ServerのPodを3つ、あとはService、Headless Serviceを使ってクラスタを構成します。

Hot Rodでアクセスするアプリケーションは、OKD上にデプロイするのでWildFlyで動かすWebアプリケーションとして作ることに

します。

つまり、ざっくりとしたイメージはこんな感じです。

アプリケーションからは、Infinispan Serverのクラスタには、最初にServiceを介してアクセスすることにします。
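To make the difference between the two Services concrete, here is a minimal sketch that resolves a host name to all of its addresses using only the JDK. In Kubernetes, a ClusterIP Service name is expected to resolve to a single virtual IP, while a Headless Service (`clusterIP: None`) resolves to the IP addresses of the backing Pods, which is what DNS discovery relies on. The class name `ServiceDnsLookup` is hypothetical, and the two Service names are the ones used in this post; they only resolve from inside the cluster.

```java
import java.net.InetAddress;
import java.net.UnknownHostException;
import java.util.ArrayList;
import java.util.List;

class ServiceDnsLookup {
    // Resolve a host name and return every address it maps to.
    // Returns an empty list when the name cannot be resolved.
    static List<String> resolveAll(String host) {
        List<String> addresses = new ArrayList<>();
        try {
            for (InetAddress address : InetAddress.getAllByName(host)) {
                addresses.add(address.getHostAddress());
            }
        } catch (UnknownHostException e) {
            // Outside the cluster the Service names are simply unknown.
        }
        return addresses;
    }

    public static void main(String[] args) {
        // Service names taken from this post's manifest.
        String[] names = args.length > 0
                ? args
                : new String[] {"infinispan-server", "infinispan-server-ping"};
        for (String name : names) {
            System.out.println(name + " -> " + resolveAll(name));
        }
    }
}
```

Run from a Pod in the same project, the first name should print one address and the second should print one address per Infinispan Server Pod, assuming the cluster DNS behaves as described above.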

OKDへのInfinispan Serverのデプロイ

最初に、Infinispan ServerをOKDにデプロイします。

$ oc apply -f infinispan-server.yml

このYAMLには、Infinispan ServerのImageStreamの定義、DeploymentConfig、Service、Headless Service、ConfigMap、

そして今回は使いませんが、Routeの定義が含まれています。

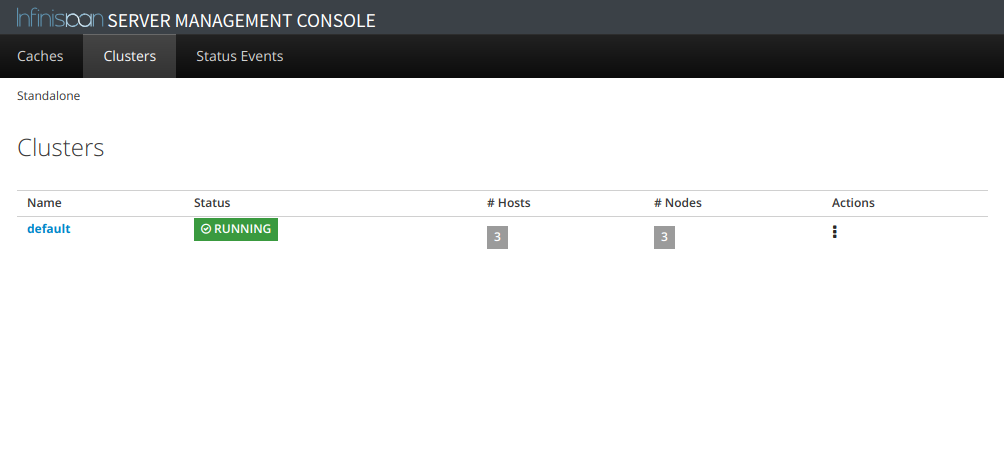

で、これでInfinispan Serverのクラスタが形成されます。

Management Consoleを使うために作ったRouteから見ると、こんな感じ。

Creating the application

Next, create the application to deploy to OKD. Let's make it a simple application that uses JAX-RS.

The relevant part of pom.xml looks like this.

    <packaging>war</packaging>

    <properties>
        <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
        <project.reporting.outputEncoding>UTF-8</project.reporting.outputEncoding>
        <maven.compiler.source>1.8</maven.compiler.source>
        <maven.compiler.target>1.8</maven.compiler.target>
        <failOnMissingWebXml>false</failOnMissingWebXml>
    </properties>

    <dependencies>
        <dependency>
            <groupId>javax</groupId>
            <artifactId>javaee-web-api</artifactId>
            <version>7.0</version>
            <scope>provided</scope>
        </dependency>
        <dependency>
            <groupId>org.infinispan</groupId>
            <artifactId>infinispan-client-hotrod</artifactId>
            <version>9.4.5.Final</version>
        </dependency>
    </dependencies>

    <build>
        <finalName>ROOT</finalName>
    </build>

Enable JAX-RS.

src/main/java/org/littlewings/infinispan/okd/JaxrsActivator.java

    package org.littlewings.infinispan.okd;

    import javax.ws.rs.ApplicationPath;
    import javax.ws.rs.core.Application;

    // Activates JAX-RS at the root of the web application.
    @ApplicationPath("")
    public class JaxrsActivator extends Application {
    }

Let's have CDI manage the RemoteCacheManager.

src/main/java/org/littlewings/infinispan/okd/RemoteCacheManagerProducer.java

    package org.littlewings.infinispan.okd;

    import javax.enterprise.context.Dependent;
    import javax.enterprise.inject.Disposes;
    import javax.enterprise.inject.Produces;

    import org.infinispan.client.hotrod.RemoteCacheManager;
    import org.infinispan.client.hotrod.configuration.ConfigurationBuilder;

    @Dependent
    public class RemoteCacheManagerProducer {
        @Produces
        public RemoteCacheManager remoteCacheManager() {
            // Connect to the Hot Rod endpoint through the Service name.
            return new RemoteCacheManager(
                    new ConfigurationBuilder()
                            .addServer()
                            .host("infinispan-server")
                            .port(11222)
                            .build()
            );
        }

        // Stop the client when the produced instance is disposed.
        public void destroy(@Disposes RemoteCacheManager remoteCacheManager) {
            remoteCacheManager.stop();
        }
    }

The RemoteCacheManager connects to the Service of the Infinispan Server.

            return new RemoteCacheManager(
                    new ConfigurationBuilder()
                            .addServer()
                            .host("infinispan-server")
                            .port(11222)
                            .build()
            );
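If hard-coding the Service name feels too rigid, one option is to read the endpoint from the environment and fall back to the values used in this post. The following is only a sketch under that assumption: the class `HotRodEndpoint` and the environment variable names `HOTROD_HOST` and `HOTROD_PORT` are hypothetical and are not defined by Infinispan, OKD, or the original application.

```java
import java.util.Map;

class HotRodEndpoint {
    final String host;
    final int port;

    HotRodEndpoint(String host, int port) {
        this.host = host;
        this.port = port;
    }

    // HOTROD_HOST and HOTROD_PORT are hypothetical variable names chosen for
    // this sketch; the defaults are the Service name and port from this post.
    static HotRodEndpoint fromEnv(Map<String, String> env) {
        String host = env.getOrDefault("HOTROD_HOST", "infinispan-server");
        int port = Integer.parseInt(env.getOrDefault("HOTROD_PORT", "11222"));
        return new HotRodEndpoint(host, port);
    }

    public static void main(String[] args) {
        HotRodEndpoint endpoint = fromEnv(System.getenv());
        System.out.println(endpoint.host + ":" + endpoint.port);
    }
}
```

The producer could then pass `endpoint.host` and `endpoint.port` to `.host(...)` and `.port(...)` instead of the literals.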

The YAML for just that Service, excerpted from the full manifest, looks like this.

    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app: infinispan-server
      name: infinispan-server
    spec:
      ports:
      - name: 7600-tcp
        port: 7600
        protocol: TCP
        targetPort: 7600
      - name: 8080-tcp
        port: 8080
        protocol: TCP
        targetPort: 8080
      - name: 8181-tcp
        port: 8181
        protocol: TCP
        targetPort: 8181
      - name: 8888-tcp
        port: 8888
        protocol: TCP
        targetPort: 8888
      - name: 9990-tcp
        port: 9990
        protocol: TCP
        targetPort: 9990
      - name: 11211-tcp
        port: 11211
        protocol: TCP
        targetPort: 11211
      - name: 11222-tcp
        port: 11222
        protocol: TCP
        targetPort: 11222
      - name: 57600-tcp
        port: 57600
        protocol: TCP
        targetPort: 57600
      selector:
        app: infinispan-server
        deploymentconfig: infinispan-server
      sessionAffinity: None
      type: ClusterIP

Next, define a JAX-RS resource class that uses the default Cache obtained from the RemoteCacheManager.

src/main/java/org/littlewings/infinispan/okd/RemoteCacheResource.java

    package org.littlewings.infinispan.okd;

    import java.util.Arrays;
    import java.util.List;
    import java.util.Map;
    import javax.enterprise.context.ApplicationScoped;
    import javax.inject.Inject;
    import javax.ws.rs.Consumes;
    import javax.ws.rs.GET;
    import javax.ws.rs.POST;
    import javax.ws.rs.Path;
    import javax.ws.rs.PathParam;
    import javax.ws.rs.Produces;
    import javax.ws.rs.core.MediaType;

    import org.infinispan.client.hotrod.RemoteCache;
    import org.infinispan.client.hotrod.RemoteCacheManager;

    @ApplicationScoped
    @Path("remote")
    public class RemoteCacheResource {
        @Inject
        RemoteCacheManager remoteCacheManager;

        // Store the "key"/"value" pair from the JSON body in the "default" cache.
        @POST
        @Path("cache")
        @Consumes(MediaType.APPLICATION_JSON)
        public String put(Map<String, String> request) {
            String key = request.get("key");
            String value = request.get("value");

            RemoteCache<String, String> cache = remoteCacheManager.getCache("default");
            cache.put(key, value);

            return "OK!!";
        }

        // Look up a value by key in the "default" cache.
        @GET
        @Path("cache/{key}")
        @Produces(MediaType.TEXT_PLAIN)
        public String get(@PathParam("key") String key) {
            RemoteCache<String, String> cache = remoteCacheManager.getCache("default");
            return cache.get(key);
        }

        // Return the servers the Hot Rod client currently knows about.
        @GET
        @Path("servers")
        @Produces(MediaType.APPLICATION_JSON)
        public List<String> servers() {
            return Arrays.asList(remoteCacheManager.getServers());
        }
    }

The resource can also return the list of servers the client currently recognizes.

Deploy to OKD and create a Route. The image used for the deployment is WildFly 13.0.0.Final.

    $ oc new-app --name app wildfly:13.0~http://[path to the application's Git repository]
    $ oc expose svc/app

Check the Route endpoint for the application.

    $ oc get route
    NAME                                    HOST/PORT                                                               PATH      SERVICES            PORT        TERMINATION   WILDCARD
    app                                     app-myproject.192.168.42.144.nip.io                                               app                 8080-tcp                  None
    infinispan-server-hotrod-endpoint       infinispan-server-hotrod-endpoint-myproject.192.168.42.144.nip.io                 infinispan-server   11222-tcp                 None
    infinispan-server-management-endpoint   infinispan-server-management-endpoint-myproject.192.168.42.144.nip.io             infinispan-server   9990-tcp                  None
    infinispan-server-memcached-endpoint    infinispan-server-memcached-endpoint-myproject.192.168.42.144.nip.io              infinispan-server   11211-tcp                 None
    infinispan-server-rest-endpoint         infinispan-server-rest-endpoint-myproject.192.168.42.144.nip.io                   infinispan-server   8080-tcp                  None

Let's fetch the list of servers.

    $ curl app-myproject.192.168.42.144.nip.io/remote/servers
    ["172.17.0.12:11222","172.17.0.9:11222","172.17.0.11:11222"]

These look like Pod IP addresses and ports.

Let's check the Pod IP addresses.

    $ oc get pods -o wide
    NAME                        READY     STATUS      RESTARTS   AGE       IP            NODE        NOMINATED NODE
    app-1-build                 0/1       Completed   0          2m        172.17.0.6    localhost   <none>
    app-1-v8btv                 1/1       Running     0          1m        172.17.0.13   localhost   <none>
    infinispan-server-1-78mvd   1/1       Running     0          55m       172.17.0.12   localhost   <none>
    infinispan-server-1-jm5nt   1/1       Running     0          55m       172.17.0.9    localhost   <none>
    infinispan-server-1-qh2sp   1/1       Running     0          55m       172.17.0.11   localhost   <none>

They are indeed the IP addresses of the Infinispan Server Pods.

Now let's look at the application log to see what it learned about the Infinispan Servers at this point.

    07:14:10,534 INFO  [org.infinispan.client.hotrod.impl.protocol.Codec21] (HotRod-client-async-pool-0) ISPN004006: Server sent new topology view (id=9, age=0) containing 3 addresses: [172.17.0.11:11222, 172.17.0.12:11222, 172.17.0.9:11222]
    07:14:10,537 INFO  [org.infinispan.client.hotrod.impl.transport.netty.ChannelFactory] (HotRod-client-async-pool-0) ISPN004014: New server added(172.17.0.11:11222), adding to the pool.
    07:14:10,540 INFO  [org.infinispan.client.hotrod.impl.transport.netty.ChannelFactory] (HotRod-client-async-pool-0) ISPN004014: New server added(172.17.0.12:11222), adding to the pool.
    07:14:10,540 INFO  [org.infinispan.client.hotrod.impl.transport.netty.ChannelFactory] (HotRod-client-async-pool-0) ISPN004014: New server added(172.17.0.9:11222), adding to the pool.
    07:14:10,541 INFO  [org.infinispan.client.hotrod.impl.transport.netty.ChannelFactory] (HotRod-client-async-pool-0) ISPN004016: Server not in cluster anymore(infinispan-server:11222), removing from the pool.

The client recognizes three nodes.

The entry that was specified by the Service name appears to be removed from the pool.

    07:14:10,541 INFO  [org.infinispan.client.hotrod.impl.transport.netty.ChannelFactory] (HotRod-client-async-pool-0) ISPN004016: Server not in cluster anymore(infinispan-server:11222), removing from the pool.

In other words, subsequent requests appear to go directly to the Pods rather than through the Service.
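The effect of that topology update can be pictured as a set difference between the client's current pool and the addresses sent by the server. The sketch below is not Infinispan's implementation; it is a hypothetical illustration (class name `TopologyUpdateSketch` included) that reproduces which entries the log reports as added and removed, using the addresses from this post.

```java
import java.util.Set;
import java.util.TreeSet;

class TopologyUpdateSketch {
    // Addresses present in the new topology but not yet in the pool.
    static Set<String> added(Set<String> pool, Set<String> topology) {
        Set<String> result = new TreeSet<>(topology);
        result.removeAll(pool);
        return result;
    }

    // Addresses in the pool that the new topology no longer contains.
    static Set<String> removed(Set<String> pool, Set<String> topology) {
        Set<String> result = new TreeSet<>(pool);
        result.removeAll(topology);
        return result;
    }

    public static void main(String[] args) {
        // The pool initially holds only the Service name given to the client.
        Set<String> pool = new TreeSet<>();
        pool.add("infinispan-server:11222");

        // Addresses reported in the topology view from the log above.
        Set<String> topology = new TreeSet<>();
        topology.add("172.17.0.11:11222");
        topology.add("172.17.0.12:11222");
        topology.add("172.17.0.9:11222");

        System.out.println("added:   " + added(pool, topology));
        System.out.println("removed: " + removed(pool, topology));
    }
}
```

Because the Service name is not one of the addresses the servers advertise, it ends up in the removed set, which matches the ISPN004016 message.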

Finally, a quick functional check.

put.

    $ curl -H 'Content-Type: application/json' app-myproject.192.168.42.144.nip.io/remote/cache -d '{"key":"key1", "value":"value1"}'
    OK!!

get.

    $ curl app-myproject.192.168.42.144.nip.io/remote/cache/key1
    value1

That looks fine.

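Summary

With this, I confirmed that an application using Hot Rod works on OKD.

I had nearly forgotten JAX-RS and got slightly stuck on things outside the main topic, but overall I did not run into much trouble.

It works with surprisingly little effort.

The source code created for this post is available here.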

https://github.com/kazuhira-r/infinispan-getting-started/tree/master/remote-on-okd

Appendix: YAML definition of Infinispan Server

Here is the YAML definition of the Infinispan Server deployed to OKD.

The configuration is based on the cloud.xml bundled with Infinispan Server, with DNS_PING added and the unused PING protocols removed.

infinispan-server.yml

--- ## ConfigMap apiVersion: v1 kind: ConfigMap metadata: name: infinispan-server-configmap data: cloud-custom.xml: | <?xml version='1.0' encoding='UTF-8'?> <server xmlns="urn:jboss:domain:8.0"> <extensions> <extension module="org.infinispan.extension"/> <extension module="org.infinispan.server.endpoint"/> <extension module="org.jboss.as.connector"/> <extension module="org.jboss.as.deployment-scanner"/> <extension module="org.jboss.as.jdr"/> <extension module="org.jboss.as.jmx"/> <extension module="org.jboss.as.logging"/> <extension module="org.jboss.as.naming"/> <extension module="org.jboss.as.remoting"/> <extension module="org.jboss.as.security"/> <extension module="org.jboss.as.transactions"/> <extension module="org.jgroups.extension"/> <extension module="org.wildfly.extension.elytron"/> <extension module="org.wildfly.extension.io"/> </extensions> <management> <security-realms> <security-realm name="ManagementRealm"> <authentication> <local default-user="$local"/> <properties path="mgmt-users.properties" relative-to="jboss.server.config.dir"/> </authentication> <authorization map-groups-to-roles="false"> <properties path="mgmt-groups.properties" relative-to="jboss.server.config.dir"/> </authorization> </security-realm> <security-realm name="ApplicationRealm"> <server-identities> <ssl> <keystore path="application.keystore" relative-to="jboss.server.config.dir" keystore-password="password" alias="server" key-password="password" generate-self-signed-certificate-host="localhost"/> </ssl> </server-identities> <authentication> <local default-user="$local" allowed-users="*"/> <properties path="application-users.properties" relative-to="jboss.server.config.dir"/> </authentication> <authorization> <properties path="application-roles.properties" relative-to="jboss.server.config.dir"/> </authorization> </security-realm> </security-realms> <audit-log> <formatters> <json-formatter name="json-formatter"/> </formatters> <handlers> <file-handler name="file" 
formatter="json-formatter" relative-to="jboss.server.data.dir" path="audit-log.log"/> </handlers> <logger log-boot="true" enabled="false"> <handlers> <handler name="file"/> </handlers> </logger> </audit-log> <management-interfaces> <http-interface security-realm="ManagementRealm"> <http-upgrade enabled="true"/> <socket-binding http="management-http"/> </http-interface> </management-interfaces> <access-control> <role-mapping> <role name="SuperUser"> <include> <user name="$local"/> </include> </role> </role-mapping> </access-control> </management> <profile> <subsystem xmlns="urn:jboss:domain:logging:3.0"> <console-handler name="CONSOLE"> <level name="INFO"/> <formatter> <named-formatter name="COLOR-PATTERN"/> </formatter> </console-handler> <periodic-rotating-file-handler name="FILE" autoflush="true"> <formatter> <named-formatter name="PATTERN"/> </formatter> <file relative-to="jboss.server.log.dir" path="server.log"/> <suffix value=".yyyy-MM-dd"/> <append value="true"/> </periodic-rotating-file-handler> <size-rotating-file-handler name="HR-ACCESS-FILE" autoflush="true"> <formatter> <named-formatter name="ACCESS-LOG"/> </formatter> <file relative-to="jboss.server.log.dir" path="hotrod-access.log"/> <append value="true"/> <rotate-size value="10M"/> <max-backup-index value="10"/> </size-rotating-file-handler> <size-rotating-file-handler name="REST-ACCESS-FILE" autoflush="true"> <formatter> <named-formatter name="ACCESS-LOG"/> </formatter> <file relative-to="jboss.server.log.dir" path="rest-access.log"/> <append value="true"/> <rotate-size value="10M"/> <max-backup-index value="10"/> </size-rotating-file-handler> <logger category="com.arjuna"> <level name="WARN"/> </logger> <logger category="org.jboss.as.config"> <level name="DEBUG"/> </logger> <logger category="sun.rmi"> <level name="WARN"/> </logger> <logger category="org.infinispan.HOTROD_ACCESS_LOG" use-parent-handlers="false"> <!-- Set to TRACE to enable access logging for hot rod or use DMR --> <level 
name="INFO"/> <handlers> <handler name="HR-ACCESS-FILE"/> </handlers> </logger> <logger category="org.infinispan.REST_ACCESS_LOG" use-parent-handlers="false"> <!-- Set to TRACE to enable access logging for rest or use DMR --> <level name="INFO"/> <handlers> <handler name="REST-ACCESS-FILE"/> </handlers> </logger> <root-logger> <level name="INFO"/> <handlers> <handler name="CONSOLE"/> <handler name="FILE"/> </handlers> </root-logger> <formatter name="PATTERN"> <pattern-formatter pattern="%d{yyyy-MM-dd HH:mm:ss,SSS} %-5p [%c] (%t) %s%e%n"/> </formatter> <formatter name="COLOR-PATTERN"> <pattern-formatter pattern="%K{level}%d{HH:mm:ss,SSS} %-5p [%c] (%t) %s%e%n"/> </formatter> <formatter name="ACCESS-LOG"> <pattern-formatter pattern="%X{address} %X{user} [%d{dd/MMM/yyyy:HH:mm:ss z}] "%X{method} %m %X{protocol}" %X{status} %X{requestSize} %X{responseSize} %X{duration}%n"/> </formatter> </subsystem> <subsystem xmlns="urn:jboss:domain:deployment-scanner:2.0"> <deployment-scanner path="deployments" relative-to="jboss.server.base.dir" scan-interval="5000" runtime-failure-causes-rollback="${jboss.deployment.scanner.rollback.on.failure:false}"/> </subsystem> <subsystem xmlns="urn:jboss:domain:datasources:5.0"> <datasources> <datasource jndi-name="java:jboss/datasources/ExampleDS" pool-name="ExampleDS" enabled="true" use-java-context="true"> <connection-url>jdbc:h2:mem:test;DB_CLOSE_DELAY=-1;DB_CLOSE_ON_EXIT=FALSE</connection-url> <driver>h2</driver> <security> <user-name>sa</user-name> <password>sa</password> </security> </datasource> <drivers> <driver name="h2" module="com.h2database.h2"> <xa-datasource-class>org.h2.jdbcx.JdbcDataSource</xa-datasource-class> </driver> </drivers> </datasources> </subsystem> <subsystem xmlns="urn:wildfly:elytron:4.0" final-providers="combined-providers" disallowed-providers="OracleUcrypto"> <providers> <aggregate-providers name="combined-providers"> <providers name="elytron"/> <providers name="openssl"/> </aggregate-providers> 
<provider-loader name="elytron" module="org.wildfly.security.elytron"/> <provider-loader name="openssl" module="org.wildfly.openssl"/> </providers> <audit-logging> <file-audit-log name="local-audit" path="audit.log" relative-to="jboss.server.log.dir" format="JSON"/> </audit-logging> <security-domains> <security-domain name="ApplicationDomain" default-realm="ApplicationRealm" permission-mapper="default-permission-mapper"> <realm name="ApplicationRealm" role-decoder="groups-to-roles"/> <realm name="local"/> </security-domain> <security-domain name="ManagementDomain" default-realm="ManagementRealm" permission-mapper="default-permission-mapper"> <realm name="ManagementRealm" role-decoder="groups-to-roles"/> <realm name="local" role-mapper="super-user-mapper"/> </security-domain> </security-domains> <security-realms> <identity-realm name="local" identity="$local"/> <properties-realm name="ApplicationRealm"> <users-properties path="application-users.properties" relative-to="jboss.server.config.dir" digest-realm-name="ApplicationRealm"/> <groups-properties path="application-roles.properties" relative-to="jboss.server.config.dir"/> </properties-realm> <properties-realm name="ManagementRealm"> <users-properties path="mgmt-users.properties" relative-to="jboss.server.config.dir" digest-realm-name="ManagementRealm"/> <groups-properties path="mgmt-groups.properties" relative-to="jboss.server.config.dir"/> </properties-realm> </security-realms> <mappers> <simple-permission-mapper name="default-permission-mapper" mapping-mode="first"> <permission-mapping> <principal name="anonymous"/> <permission-set name="default-permissions"/> </permission-mapping> <permission-mapping match-all="true"> <permission-set name="login-permission"/> <permission-set name="default-permissions"/> </permission-mapping> </simple-permission-mapper> <constant-realm-mapper name="local" realm-name="local"/> <simple-role-decoder name="groups-to-roles" attribute="groups"/> <constant-role-mapper 
name="super-user-mapper"> <role name="SuperUser"/> </constant-role-mapper> </mappers> <permission-sets> <permission-set name="login-permission"> <permission class-name="org.wildfly.security.auth.permission.LoginPermission"/> </permission-set> <permission-set name="default-permissions"/> </permission-sets> <http> <http-authentication-factory name="management-http-authentication" security-domain="ManagementDomain" http-server-mechanism-factory="global"> <mechanism-configuration> <mechanism mechanism-name="DIGEST"> <mechanism-realm realm-name="ManagementRealm"/> </mechanism> </mechanism-configuration> </http-authentication-factory> <provider-http-server-mechanism-factory name="global"/> </http> <sasl> <sasl-authentication-factory name="application-sasl-authentication" sasl-server-factory="configured" security-domain="ApplicationDomain"> <mechanism-configuration> <mechanism mechanism-name="JBOSS-LOCAL-USER" realm-mapper="local"/> <mechanism mechanism-name="DIGEST-MD5"> <mechanism-realm realm-name="ApplicationRealm"/> </mechanism> </mechanism-configuration> </sasl-authentication-factory> <sasl-authentication-factory name="management-sasl-authentication" sasl-server-factory="configured" security-domain="ManagementDomain"> <mechanism-configuration> <mechanism mechanism-name="JBOSS-LOCAL-USER" realm-mapper="local"/> <mechanism mechanism-name="DIGEST-MD5"> <mechanism-realm realm-name="ManagementRealm"/> </mechanism> </mechanism-configuration> </sasl-authentication-factory> <configurable-sasl-server-factory name="configured" sasl-server-factory="elytron"> <properties> <property name="wildfly.sasl.local-user.default-user" value="$local"/> </properties> </configurable-sasl-server-factory> <mechanism-provider-filtering-sasl-server-factory name="elytron" sasl-server-factory="global"> <filters> <filter provider-name="WildFlyElytron"/> </filters> </mechanism-provider-filtering-sasl-server-factory> <provider-sasl-server-factory name="global"/> </sasl> </subsystem> <subsystem 
xmlns="urn:infinispan:server:core:9.4" default-cache-container="clustered"> <cache-container name="clustered" default-cache="default" statistics="true"> <transport lock-timeout="60000"/> <global-state/> <distributed-cache-configuration name="transactional"> <transaction mode="NON_XA" locking="PESSIMISTIC"/> </distributed-cache-configuration> <distributed-cache-configuration name="async" mode="ASYNC"/> <replicated-cache-configuration name="replicated"/> <distributed-cache-configuration name="persistent-file-store"> <persistence> <file-store shared="false" fetch-state="true"/> </persistence> </distributed-cache-configuration> <distributed-cache-configuration name="indexed"> <indexing index="LOCAL" auto-config="true"/> </distributed-cache-configuration> <distributed-cache-configuration name="memory-bounded"> <memory> <binary size="10000000" eviction="MEMORY"/> </memory> </distributed-cache-configuration> <distributed-cache-configuration name="persistent-file-store-passivation"> <memory> <object size="10000"/> </memory> <persistence passivation="true"> <file-store shared="false" fetch-state="true"> <write-behind modification-queue-size="1024" thread-pool-size="1"/> </file-store> </persistence> </distributed-cache-configuration> <distributed-cache-configuration name="persistent-file-store-write-behind"> <persistence> <file-store shared="false" fetch-state="true"> <write-behind modification-queue-size="1024" thread-pool-size="1"/> </file-store> </persistence> </distributed-cache-configuration> <distributed-cache-configuration name="persistent-rocksdb-store"> <persistence> <rocksdb-store shared="false" fetch-state="true"/> </persistence> </distributed-cache-configuration> <distributed-cache-configuration name="persistent-jdbc-string-keyed"> <persistence> <string-keyed-jdbc-store datasource="java:jboss/datasources/ExampleDS" fetch-state="true" preload="false" purge="false" shared="false"> <string-keyed-table prefix="ISPN"> <id-column name="id" type="VARCHAR"/> <data-column 
name="datum" type="BINARY"/> <timestamp-column name="version" type="BIGINT"/> </string-keyed-table> <write-behind modification-queue-size="1024" thread-pool-size="1"/> </string-keyed-jdbc-store> </persistence> </distributed-cache-configuration> <distributed-cache name="default"/> <replicated-cache name="repl" configuration="replicated"/> </cache-container> </subsystem> <subsystem xmlns="urn:infinispan:server:endpoint:9.4"> <hotrod-connector socket-binding="hotrod" cache-container="clustered"> <topology-state-transfer lazy-retrieval="false" lock-timeout="1000" replication-timeout="5000"/> </hotrod-connector> <rest-connector socket-binding="rest" cache-container="clustered"> <authentication security-realm="ApplicationRealm" auth-method="BASIC"/> </rest-connector> </subsystem> <subsystem xmlns="urn:infinispan:server:jgroups:9.4"> <channels default="cluster"> <channel name="cluster"/> </channels> <stacks default="${jboss.default.jgroups.stack:dns}"> <stack name="dns"> <transport type="TCP" socket-binding="jgroups-tcp"> <property name="logical_addr_cache_expiration">360000</property> </transport> <protocol type="dns.DNS_PING"> <property name="dns_query">${dns.ping.name:infinispan-server-ping}</property> </protocol> <protocol type="MERGE3"/> <protocol type="FD_SOCK" socket-binding="jgroups-tcp-fd"/> <protocol type="FD_ALL"/> <protocol type="VERIFY_SUSPECT"/> <protocol type="pbcast.NAKACK2"> <property name="use_mcast_xmit">false</property> </protocol> <protocol type="UNICAST3"/> <protocol type="pbcast.STABLE"/> <protocol type="pbcast.GMS"/> <protocol type="MFC"/> <protocol type="FRAG3"/> </stack> <stack name="kubernetes"> <transport type="TCP" socket-binding="jgroups-tcp"> <property name="logical_addr_cache_expiration">360000</property> </transport> <protocol type="kubernetes.KUBE_PING"/> <protocol type="MERGE3"/> <protocol type="FD_SOCK" socket-binding="jgroups-tcp-fd"/> <protocol type="FD_ALL"/> <protocol type="VERIFY_SUSPECT"/> <protocol type="pbcast.NAKACK2"> 
<property name="use_mcast_xmit">false</property> </protocol> <protocol type="UNICAST3"/> <protocol type="pbcast.STABLE"/> <protocol type="pbcast.GMS"/> <protocol type="MFC"/> <protocol type="FRAG3"/> </stack> </stacks> </subsystem> <subsystem xmlns="urn:jboss:domain:io:3.0"> <worker name="default" io-threads="2" task-max-threads="2"/> <buffer-pool name="default"/> </subsystem> <subsystem xmlns="urn:jboss:domain:jca:5.0"> <archive-validation enabled="true" fail-on-error="true" fail-on-warn="false"/> <bean-validation enabled="true"/> <default-workmanager> <short-running-threads> <core-threads count="50"/> <queue-length count="50"/> <max-threads count="50"/> <keepalive-time time="10" unit="seconds"/> </short-running-threads> <long-running-threads> <core-threads count="50"/> <queue-length count="50"/> <max-threads count="50"/> <keepalive-time time="10" unit="seconds"/> </long-running-threads> </default-workmanager> <cached-connection-manager/> </subsystem> <subsystem xmlns="urn:jboss:domain:jdr:1.0"/> <subsystem xmlns="urn:jboss:domain:jmx:1.3"> <expose-resolved-model/> <expose-expression-model/> <remoting-connector/> </subsystem> <subsystem xmlns="urn:jboss:domain:naming:2.0"> <remote-naming/> </subsystem> <subsystem xmlns="urn:jboss:domain:remoting:4.0"> <http-connector name="http-remoting-connector" connector-ref="default" security-realm="ApplicationRealm"/> </subsystem> <subsystem xmlns="urn:jboss:domain:security:2.0"> <security-domains> <security-domain name="other" cache-type="default"> <authentication> <login-module code="Remoting" flag="optional"> <module-option name="password-stacking" value="useFirstPass"/> </login-module> <login-module code="RealmDirect" flag="required"> <module-option name="password-stacking" value="useFirstPass"/> </login-module> </authentication> </security-domain> <security-domain name="jboss-web-policy" cache-type="default"> <authorization> <policy-module code="Delegating" flag="required"/> </authorization> </security-domain> 
<security-domain name="jaspitest" cache-type="default"> <authentication-jaspi> <login-module-stack name="dummy"> <login-module code="Dummy" flag="optional"/> </login-module-stack> <auth-module code="Dummy"/> </authentication-jaspi> </security-domain> <security-domain name="jboss-ejb-policy" cache-type="default"> <authorization> <policy-module code="Delegating" flag="required"/> </authorization> </security-domain> </security-domains> </subsystem> <subsystem xmlns="urn:jboss:domain:transactions:5.0"> <core-environment node-identifier="${jboss.tx.node.id:1}"> <process-id> <uuid/> </process-id> </core-environment> <recovery-environment socket-binding="txn-recovery-environment" status-socket-binding="txn-status-manager"/> <object-store path="tx-object-store" relative-to="jboss.server.data.dir"/> </subsystem> </profile> <interfaces> <interface name="management"> <inet-address value="${jboss.bind.address.management:127.0.0.1}"/> </interface> <interface name="public"> <inet-address value="${jboss.bind.address:127.0.0.1}"/> </interface> </interfaces> <socket-binding-group name="standard-sockets" default-interface="public" port-offset="${jboss.socket.binding.port-offset:0}"> <socket-binding name="management-http" interface="management" port="${jboss.management.http.port:9990}"/> <socket-binding name="management-https" interface="management" port="${jboss.management.https.port:9993}"/> <socket-binding name="hotrod" port="11222"/> <socket-binding name="hotrod-internal" port="11223"/> <socket-binding name="hotrod-multi-tenancy" port="11224"/> <socket-binding name="jgroups-mping" port="0" multicast-address="${jboss.default.multicast.address:234.99.54.14}" multicast-port="45700"/> <socket-binding name="jgroups-tcp" port="7600"/> <socket-binding name="jgroups-tcp-fd" port="57600"/> <socket-binding name="jgroups-udp" port="55200" multicast-address="${jboss.default.multicast.address:234.99.54.14}" multicast-port="45688"/> <socket-binding name="jgroups-udp-fd" port="54200"/> 
<socket-binding name="memcached" port="11211"/> <socket-binding name="rest" port="8080"/> <socket-binding name="rest-multi-tenancy" port="8081"/> <socket-binding name="rest-ssl" port="8443"/> <socket-binding name="txn-recovery-environment" port="4712"/> <socket-binding name="txn-status-manager" port="4713"/> <outbound-socket-binding name="remote-store-hotrod-server"> <remote-destination host="remote-host" port="11222"/> </outbound-socket-binding> <outbound-socket-binding name="remote-store-rest-server"> <remote-destination host="remote-host" port="8080"/> </outbound-socket-binding> </socket-binding-group> </server> --- ## ImageStream apiVersion: v1 kind: ImageStream metadata: labels: app: infinispan-server name: infinispan-server spec: lookupPolicy: local: false tags: - from: kind: DockerImage name: jboss/infinispan-server:9.4.5.Final name: 9.4.5.Final referencePolicy: type: Source --- ## DeploymentConfig apiVersion: v1 kind: DeploymentConfig metadata: labels: app: infinispan-server name: infinispan-server spec: replicas: 3 selector: app: infinispan-server deploymentconfig: infinispan-server strategy: type: Rolling template: metadata: labels: app: infinispan-server deploymentconfig: infinispan-server spec: containers: - image: jboss/infinispan-server:latest name: infinispan-server ports: - containerPort: 8181 protocol: TCP - containerPort: 8888 protocol: TCP - containerPort: 9990 protocol: TCP - containerPort: 11211 protocol: TCP - containerPort: 11222 protocol: TCP - containerPort: 57600 protocol: TCP - containerPort: 7600 protocol: TCP - containerPort: 8080 protocol: TCP env: - name: MGMT_USER value: ispn-admin - name: MGMT_PASS value: admin-password - name: APP_USER value: ispn-user - name: APP_PASS value: user-password args: - -c - custom/cloud.xml volumeMounts: - name: config mountPath: /opt/jboss/infinispan-server/standalone/configuration/custom volumes: - name: config configMap: name: infinispan-server-configmap items: - key: cloud-custom.xml path: cloud.xml 
restartPolicy: Always triggers: - type: ConfigChange - imageChangeParams: automatic: true containerNames: - infinispan-server from: kind: ImageStreamTag name: infinispan-server:9.4.5.Final type: ImageChange --- ## Service apiVersion: v1 kind: Service metadata: labels: app: infinispan-server name: infinispan-server spec: ports: - name: 7600-tcp port: 7600 protocol: TCP targetPort: 7600 - name: 8080-tcp port: 8080 protocol: TCP targetPort: 8080 - name: 8181-tcp port: 8181 protocol: TCP targetPort: 8181 - name: 8888-tcp port: 8888 protocol: TCP targetPort: 8888 - name: 9990-tcp port: 9990 protocol: TCP targetPort: 9990 - name: 11211-tcp port: 11211 protocol: TCP targetPort: 11211 - name: 11222-tcp port: 11222 protocol: TCP targetPort: 11222 - name: 57600-tcp port: 57600 protocol: TCP targetPort: 57600 selector: app: infinispan-server deploymentconfig: infinispan-server sessionAffinity: None type: ClusterIP --- ## Service Ping apiVersion: v1 kind: Service metadata: labels: app: infinispan-server name: infinispan-server-ping spec: ports: - name: 7600-tcp port: 7600 protocol: TCP targetPort: 7600 - name: 8080-tcp port: 8080 protocol: TCP targetPort: 8080 - name: 8181-tcp port: 8181 protocol: TCP targetPort: 8181 - name: 8888-tcp port: 8888 protocol: TCP targetPort: 8888 - name: 9990-tcp port: 9990 protocol: TCP targetPort: 9990 - name: 11211-tcp port: 11211 protocol: TCP targetPort: 11211 - name: 11222-tcp port: 11222 protocol: TCP targetPort: 11222 - name: 57600-tcp port: 57600 protocol: TCP targetPort: 57600 selector: app: infinispan-server deploymentconfig: infinispan-server sessionAffinity: None type: ClusterIP clusterIP: None --- ## Route 8080 apiVersion: v1 kind: Route metadata: labels: app: infinispan-server name: infinispan-server-rest-endpoint spec: port: targetPort: 8080-tcp to: kind: Service name: infinispan-server --- ## Route 9990 apiVersion: v1 kind: Route metadata: labels: app: infinispan-server name: infinispan-server-management-endpoint spec: port: 
targetPort: 9990-tcp to: kind: Service name: infinispan-server --- ## Route 11211 apiVersion: v1 kind: Route metadata: labels: app: infinispan-server name: infinispan-server-memcached-endpoint spec: port: targetPort: 11211-tcp to: kind: Service name: infinispan-server --- ## Route 11222 apiVersion: v1 kind: Route metadata: labels: app: infinispan-server name: infinispan-server-hotrod-endpoint spec: port: targetPort: 11222-tcp to: kind: Service name: infinispan-server